A theory of evolution first appeared in the domain of biology, not in physics or chemistry, because “biology is the study of complicated things that give the appearance of having been designed for a purpose”, while physics and chemistry deal with rather simple things and principles that do not evoke design (Dawkins 1986: 13).¹ In fact, the word ‘design’ was frequently used in the 18th century and later in theological arguments about the existence of God, asserting that the intricate adaptations of living things imply that they were ‘designed’ by some rational ‘designer’ (Michl 2002).²

The most famous example of such arguments is the watchmaker argument of the eighteenth-century theologian William Paley. Paley argues in his Natural Theology that if he were to find a stone on the ground, he would not bother explaining how it got there; but if he finds a watch lying on the ground, with all its cogs and springs perfectly put together to function, he would definitely conclude

that the watch must have had a maker: that there must have existed, at some time, and at some place or other, an artificer or artificers, who formed it for the purpose which we find it actually to answer, who comprehended its construction, and designed its use. (1802: 6)

Paley was right that the existence of design needs explanation, but he was wrong about the explanation itself (Dawkins 1986: 4). It was British naturalist Charles Darwin – “the towering design theorist of the 19th century”, according to design historian Jan Michl (2006) – who formulated the right explanation and showed that design is the inevitable output of the algorithm of evolution (Blackmore 1999: 12).

—

1 See Dennett (1995: 124–35) and Dawkins (1986: 1–18) for discussions on how to define and ‘measure’ design.

2 This kind of empirical proof for the existence of God is referred to as the ‘Argument from Design’. See here for a logical critique of it.

Darwin’s Insight

Theories of biological evolution are detailed multi-level explanations of how different designs emerge in nature over the course of millennia without a ‘designer’ or any other kind of foresight. Although modern theories consist of vast improvements on what Darwin wrote in The Origin of Species in 1859, the skeleton remains unchanged (Dennett 1995: 48). Here is how Darwin originally depicted that skeleton:

If during the long course of ages and under varying conditions of life, organic beings vary at all in the several parts of their organization, and I think this cannot be disputed; if there be, owing to the high geometric powers of increase of each species, at some age, season, or year, a severe struggle for life, and this certainly cannot be disputed; then, considering the infinite complexity of the relations of all organic beings to each other and to their conditions of existence, causing an infinite diversity in structure, constitution, and habits, to be advantageous to them, I think it would be a most extraordinary fact if no variation ever had occurred useful to each being’s own welfare, in the same way as so many variations have occurred useful to man. But if variations useful to any organic being do occur, assuredly individuals thus characterized will have the best chance of being preserved in the struggle for life; and from the strong principle of inheritance they will tend to produce offspring similarly characterized. This principle of preservation, I have called, for the sake of brevity, Natural Selection. (1859: 121) [my bolds]

It is not hard to notice that Darwin described the algorithm of evolution – using ‘if/then’ statements, but without having a concept of algorithm as we do now – in two parts, respectively:

- if (variation) and if (selection) then (adaptation)

- if (adaptation) and if (inheritance) then (evolution)

It takes only a simple logical step to combine these two algorithms, replacing the adaptation in the second algorithm with the if-conditions that produce it in the first, resulting in:

if (variation) and if (selection) and if (inheritance) then (evolution)
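The substitution above can be checked mechanically. The following is a minimal sketch, assuming that each of Darwin's if/then statements is read as a material implication in propositional logic (a simplification of his prose, not something he states); the function names are hypothetical.

```python
from itertools import product

def implies(p, q):
    """Material implication: 'if p then q'."""
    return (not p) or q

def combined_rule_follows():
    """Check every truth assignment: whenever both partial rules hold,
    the combined rule must hold as well."""
    for v, s, i, a, e in product([False, True], repeat=5):
        rule1 = implies(v and s, a)           # if variation and selection then adaptation
        rule2 = implies(a and i, e)           # if adaptation and inheritance then evolution
        combined = implies(v and s and i, e)  # the merged algorithm
        if rule1 and rule2 and not combined:
            return False                      # a counterexample would end up here
    return True

print(combined_rule_follows())  # True
```

No assignment of truth values satisfies both partial rules while violating the combined one, which is all that the "adding up" of the two algorithms requires.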

Biological evolution is a material instantiation of the algorithm of evolution; biological design is the cumulative consequence of processes of replication, variation, and selection. But what is it exactly that is being replicated, varied, and selected in nature? What is the unit of natural selection? Darwin seems to think that the individual organism is the unit in question (“useful to each being’s own welfare”). By the mid-20th century, however, many biologists thought that species or groups of organisms were what was being selected – a problematic perspective called group selectionism, famously expressed by the phrase “for the good of the species” – and it took a “painful struggle” to return to Darwin’s ground (Dawkins 1982: 6).

It did not stop there, as the work of people like R. A. Fisher, G. C. Williams, J. M. Smith, W. D. Hamilton and R. L. Trivers, together with the advances in bioinformatics and computer technology, has caused another paradigm shift: a flip of the Necker Cube, as Dawkins describes it (1982: 1–8). Molecular biology supplemented evolutionary theories with a clear information-theoretic perspective (Nowak 2006: 28), and according to this new perspective, genes are the units of natural selection; information encoded in the genes is what is being replicated, mutated and selected. This view found its clearest expression in Dawkins’s distinction between replicators and vehicles, and the story of how they came to be – the story of how life on Earth began.

Dawkins’s Paradigm

According to the theory that Dawkins recounts, the Earth was teeming with free-floating molecules before there was life; big and small, stable or unstable, haphazardly forming and degrading, affected by their chemical environments, sunlight, volcanoes, or lightning. One day, a curious molecule came into existence by chance – a molecule that acted like a mold or a template, and created copies of itself, which were in turn able to create more copies of the same structure. It was the very first replicator; thus “a new kind of ‘stability’ came into the world”. As opposed to the old way of molecule formation by chance, replicator molecules were actively spreading more and more copies of themselves – as long as the building blocks were available in the environment.

But this copying process was not perfect and when mistakes occurred, they propagated as replication continued, resulting in populations of varying kinds of replicators that had descended from the same ancestor. Dawkins states that this random variation was the second condition for the algorithm of evolution to work. Because these mistakes were random, many of them were deleterious: they decreased the stability of the molecule, or its capacity to replicate. On the other hand, some resulted in more stable molecular structures, or faster or more accurate replication. These variants proliferated at the expense of the others as they used up all the free-floating building blocks. This was natural selection on the job, the third component of the algorithm of evolution. Further mistakes of these ‘successful’ molecules resulted in even more accurate or faster replicators, and this process continued as ‘good’ mistakes accumulated and bad ones died out in the unconscious competition. Dawkins describes this growing versatility:
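The three ingredients described above (replication, copying errors, and competition for finite building blocks) can be put into a toy simulation. This is only an illustrative sketch under strong assumptions that are not in Dawkins's account: each replicator is reduced to a single number (its probability of copying itself per time step), copying errors nudge that number up or down at random, and the finite supply of building blocks is modelled as a fixed cap on the population. All names and parameter values are hypothetical.

```python
import random

def simulate_replicators(generations=200, capacity=200, error_rate=0.1, seed=0):
    """Return the mean copy probability of the population after each step."""
    rng = random.Random(seed)
    population = [0.2] * 20      # identical descendants of a single ancestor
    mean_rates = []
    for _ in range(generations):
        copies = []
        for rate in population:
            if rng.random() < rate:                # replication succeeds
                if rng.random() < error_rate:      # imperfect copy: random variation
                    rate = min(1.0, max(0.0, rate + rng.gauss(0.0, 0.05)))
                copies.append(rate)
        population.extend(copies)
        # Finite building blocks: at most `capacity` molecules persist.
        if len(population) > capacity:
            population = rng.sample(population, capacity)
        mean_rates.append(sum(population) / len(population))
    return mean_rates

rates = simulate_replicators()
print(round(rates[0], 3), round(rates[-1], 3))
```

Nothing in the loop prefers faster replicators explicitly. Variants with a higher copy probability simply leave more copies before the cap is applied, so they should tend to take up a growing share of the population over time, although individual runs are subject to chance.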

The process of improvement was cumulative. Ways of increasing stability and of decreasing rivals’ stability became more elaborate and more efficient. Some of them may even have ‘discovered’ how to break up molecules of rival varieties chemically, and to use the building blocks so released for making their own copies. These proto-carnivores simultaneously obtained food and removed competing rivals. Other replicators perhaps discovered how to protect themselves, either chemically, or by building a physical wall of protein around themselves. This may have been how the first living cells appeared. Replicators began not merely to exist, but to construct for themselves containers, vehicles for their continued existence.

Dawkins goes on to tell how some of these vehicles, or survival machines, continued to get bigger and more elaborate as millions of years passed. Some specialized in water environments, some exploited land, and some managed to fly. They include all living things on Earth that we know. Although they look and behave in hugely different ways, they still have one thing in common, and that is their original reason for existence: all of them preserve and propagate the replicators inside. We now call these replicators genes, and “we are their survival machines” (1976: 13–20).

The paradigm shift that this theory caused is this: genes do not exist for the reproduction of organisms, but organisms exist for the replication of genes (thus the term vehicle). This difference is more than semantics because such a shift allowed sound explanations for previously incomprehensible features of biological systems such as non-reciprocal altruistic behavior or sterility in social insects (Futuyma 1998: 594–9). Dennett expresses what is different in this view with an analogy:

Lawyers ask, in Latin, Cui bono?, a question that often strikes at the heart of important issues: Who benefits from this matter? (…) The fate of a body and the fate of its genes are tightly linked. But they are not perfectly coincident. What about those cases when push comes to shove, and the interests of the body (long life, happiness, comfort, etc.) conflict with the interests of the genes? (1995: 325)

Such cases remained somewhat mysterious for biologists until the renowned evolutionary biologist G. C. Williams (1964) posited the gene-centered view, which was later adopted and famously presented by Dawkins in The Selfish Gene (1976). The theorem of the selfish gene can be summarized with one phrase: “An animal’s behaviour tends to maximize the survival of the genes ‘for’ that behaviour” (Dawkins 1982: 233). In other words, “when push comes to shove, what’s good for the genes determines what the future will hold” (Dennett 1995: 326).

Genes and Organisms

The genome – the complete set of chromosomes of a living thing – acts like a recipe for building the organism and making it work throughout its life, as “genes always exert their final effects on bodies by means of local influences on cells” (Dawkins 1986: 52). How to define a gene is a controversial issue. Dawkins derives his definition from Williams: “any portion of chromosomal material that potentially lasts for enough generations to serve as a unit of natural selection” (Dawkins 1976: 29). Douglas Futuyma gives a short definition in the glossary of Evolutionary Biology, “the functional unit of heredity”, before adding that it is a complex concept and referring the reader to chapter 3 of the book (1998).

The term phenotype refers to the physical – morphological, physiological, biochemical, or behavioral – expression of the genotype (the genetic profile) (Futuyma 1998: 37). The relationship between phenotype and genotype is highly complex: many phenotypic traits are results of interactions of multiple genes (polygeny); one gene may have multiple effects on different traits (pleiotropy); and the expression of a gene is often controlled by other genes (epistasis) (Futuyma 1998: 42–56).

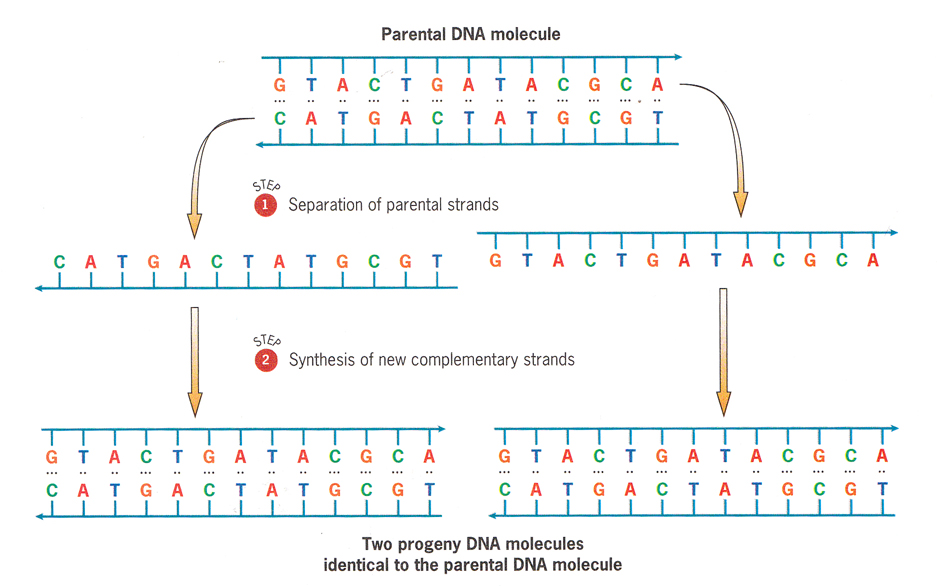

Snustad and Simmons explain in Principles of Genetics that genes are encoded with the sequence of four molecules called bases (adenine, guanine, cytosine, and thymine) along the deoxyribonucleic acid (DNA) chain, arranged in separate volumes called chromosomes. (Some viruses encode their genetic information in a slightly different chain, the ribonucleic acid; RNA.) This coding system is often referred to as a four-letter alphabet (A, G, C, T). DNA is a double-stranded molecule made of pairs of bases (A paired with T and G with C), so one strand of a DNA molecule is the exact complement of the other.
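The pairing rule means that either strand fully determines the other. A minimal sketch, assuming strands are written as plain strings over the four-letter alphabet and ignoring the fact that the two strands of real DNA run in opposite directions; the names are hypothetical.

```python
# Base-pairing rule: A pairs with T, G pairs with C.
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}

def complement_strand(strand):
    """Return the strand that pairs, base by base, with the given one."""
    return "".join(COMPLEMENT[base] for base in strand)

strand = "ATGCCG"
partner = complement_strand(strand)
print(partner)                     # TACGGC
print(complement_strand(partner))  # ATGCCG
```

Applying the rule twice returns the original sequence, which is why each strand can serve as a template for rebuilding the other during replication.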

Figure 3. DNA replication. (Snustad & Simmons 2003: 18)

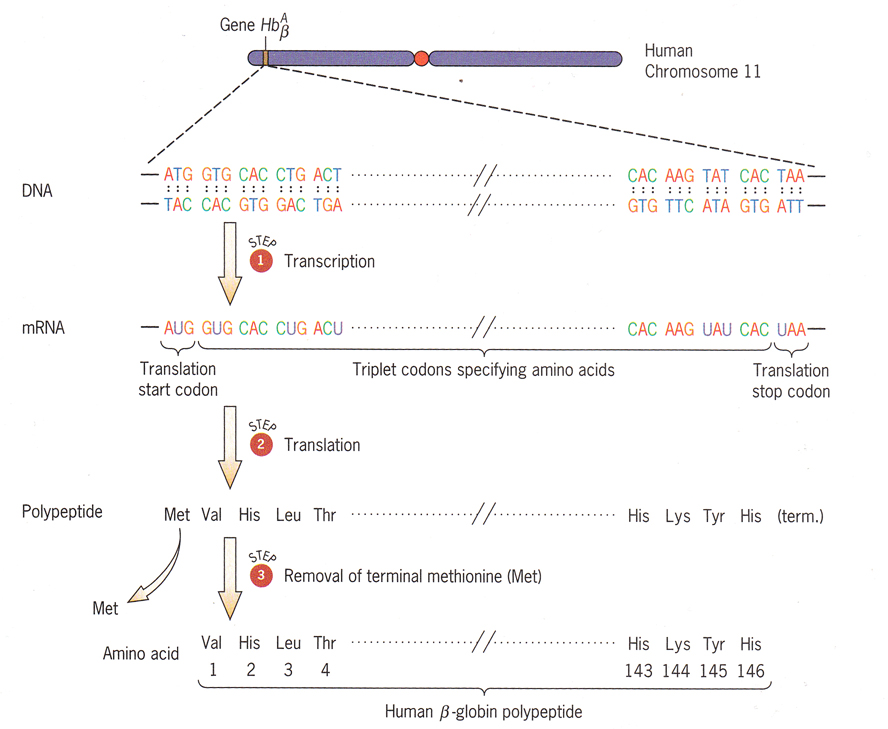

The genetic information is copied from parent to offspring and from cell to cell during development by an accurate replication of the sequence of bases in DNA (Figure 3). Every cell in a multicellular organism has the complete copy of the genome (except for the sex cells; see the footnote in the next section), but different parts of it are ‘read’ in different types of cells. The application of the recipe encoded in the genome takes place through complex processes called transcription and translation (Figure 4) resulting in the synthesis of proteins – large molecules made out of amino acids that “catalyze the metabolic reactions essential to life and contribute much of the structure of living organisms”.

Gene expression starts with transcription: the creation of a single-stranded RNA molecule corresponding to – in other words, complementing in base pairs – a sequence of bases on one strand of the DNA. (This RNA molecule is called mRNA since it acts like a messenger between DNA and proteins.) Then comes the translation where amino acids are put together to form proteins according to the sequence of bases on the mRNA molecule.¹ (Snustad & Simmons 2003: 17–8) The structure and the function of the protein depend on the order of the amino acids, thus on the sequence of bases on the mRNA molecule, thus on the sequence of bases on the DNA (Dawkins 1986: 120).
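The two steps can be sketched in a few lines. The example below is a simplification under stated assumptions: the DNA template strand is a plain string, only six codons of the standard genetic code are included (a full table has 64 entries), and the helper names are hypothetical.

```python
# Partial codon table from the standard genetic code; only the codons used here.
CODON_TABLE = {
    "AUG": "Met", "GAA": "Glu", "GAG": "Glu",
    "AAA": "Lys", "GUG": "Val", "UAA": "Stop",
}
# RNA uses uracil (U) where DNA uses thymine (T).
DNA_TO_MRNA = {"A": "U", "T": "A", "G": "C", "C": "G"}

def transcribe(template_strand):
    """Build the mRNA that complements a DNA template strand."""
    return "".join(DNA_TO_MRNA[base] for base in template_strand)

def translate(mrna):
    """Read the mRNA three bases at a time until a stop codon appears."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "Stop":
            break
        protein.append(amino_acid)
    return protein

mrna = transcribe("TACCTCTTTATT")
print(mrna)                 # AUGGAGAAAUAA
print(translate(mrna))      # ['Met', 'Glu', 'Lys']
print(translate("GAAGAG"))  # ['Glu', 'Glu']
```

The last line shows two different codons yielding the same amino acid, so the order of amino acids depends on the base sequence, but not every change in the base sequence changes the protein.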

Figure 4. Transcription and translation. (Snustad & Simmons 2003: 19)

This causal chain explains what is meant by the phrase “genetic information encoded in DNA”. Dawkins (1986: 119–20) illustrates this encoding and decoding of information with a lucid analogy with computers:

When the information in a computer memory has been read from a particular location, one of two things may happen to it. It can either simply be written somewhere else, or it can become involved in some ‘action’. Being written somewhere else means being copied. We have already seen that DNA is readily copied from one cell to a new cell, and that chunks of DNA may be copied from one individual to another individual, namely its child. ‘Action’ is more complicated. In computers, one kind of action is the execution of program instructions. In my computer’s ROM, location numbers 64489, 64490 and 64491, taken together, contain a particular pattern of contents – 1s and 0s – which, when interpreted as instructions, result in the computer’s little loudspeaker uttering a blip sound. This bit pattern is 101011010011000011000000. There is nothing inherently blippy or noisy about that bit pattern. Nothing about it tells you that it will have that effect on the loudspeaker. It has that effect only because of the way the rest of the computer is wired up. In the same way, patterns in the DNA four-letter code have effects, for instance on eye colour or behaviour, but these effects are not inherent in the DNA data patterns themselves. They have their effects only as a result of the way the rest of the embryo develops, which in turn is influenced by the effects of patterns in other parts of the DNA.

The genome length of different species varies greatly, ranging from about 10⁴ bases for small viruses, to 3 × 10⁹ for humans, to 140 × 10⁹ for lungfish (Nowak 2006: 27). The DNA of a single lily seed or a single salamander sperm has enough information capacity to store the Encyclopaedia Britannica 60 times over (Dawkins 1986: 116). Although these numbers are large, very little of the genome actually encodes functional products.² For mammals in general, less than 10 percent of the DNA is functional (Futuyma 1998: 45). To pursue the analogy with computers, this amounts to about 30 megabytes of information for our species (around 5 percent of the genome) (Bentley 2001: 201).
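The order of magnitude of the megabyte figure can be reproduced with a back-of-the-envelope calculation. The sketch below rests on assumptions that are not taken from the cited sources: each base carries log2(4) = 2 bits, a megabyte is 10⁶ bytes, and the function name is hypothetical.

```python
import math

BITS_PER_BASE = math.log2(4)  # four-letter alphabet: 2 bits per base

def genome_megabytes(bases, functional_fraction=1.0):
    """Raw information capacity of a genome, in megabytes of 10**6 bytes."""
    bits = bases * BITS_PER_BASE * functional_fraction
    return bits / 8 / 1e6

human_bases = 3e9
print(round(genome_megabytes(human_bases), 1))        # 750.0
print(round(genome_megabytes(human_bases, 0.05), 1))  # 37.5
```

Under these assumptions the whole human genome holds about 750 megabytes, and 5 percent of it about 37.5 megabytes, which is in the same range as the figure of roughly 30 megabytes quoted above.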

The central dogma of molecular biology, first articulated by Francis Crick in 1958, states that genetic information flows (1) from DNA to DNA during its transmission from generation to generation and (2) from DNA to protein during its phenotypic expression in an organism. Although some viruses manage to partially reverse the flow as the viral RNA gets transcribed into the host’s DNA, the transfer of information from RNA to protein is always irreversible (Snustad & Simmons 2003: 275). The changes in an individual’s phenotype that occur throughout its life thus do not get transcribed back into its genome. In other words, acquired characteristics, such as the muscles that an athlete develops, are not passed on to his children (Futuyma 1998: 26).

Peter Bentley reminds us that this fact is the reason why the unit of selection is the gene and not the organism: when organisms reproduce, they do not provide their offspring with copies of themselves, they provide them with copies of their genes. If a person loses her left thumb in an accident, her future children will not be born without thumbs. Reproduction happens at the level of genes, not organisms. (2001: 49)

—

1 The mRNA molecule is read in codons, triplets of adjacent bases, each assigned to one type of amino acid. Since there are 64 (4 × 4 × 4) different codons and only 20 amino acids, the code is said to be “degenerate”: two or more synonymous codons code for most of the 20 amino acids (Futuyma 1998: 45).

2 This is a fact predicted by the selfish gene theory (Dawkins 1982: 156).

Mutations and Selection

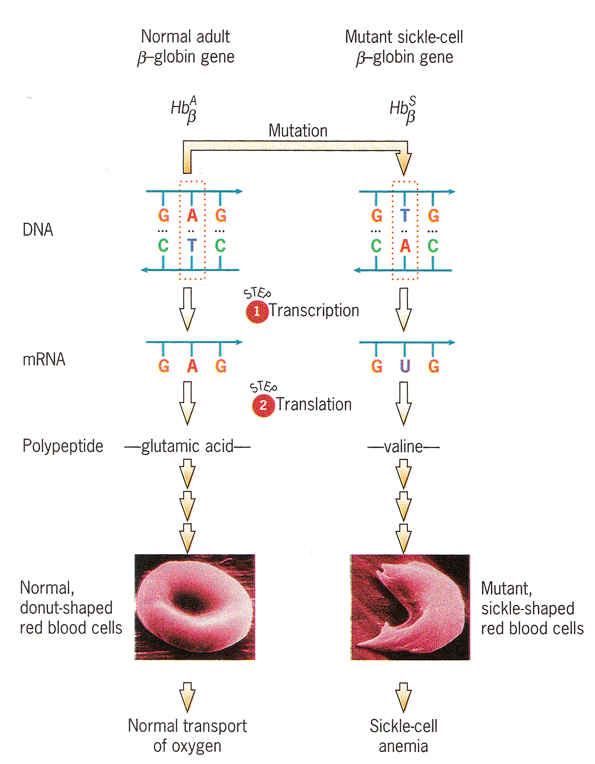

Futuyma explains that the variation that biological evolution needs comes from the random mutations (mistakes in replication) that occur when the germ cells that give rise to new generations are produced. In unicellular organisms, every cell is also a germ cell. In sexually reproducing multicellular organisms, gametes (e.g. sperm and eggs in humans) are the germ cells responsible for the creation of the next generation; mutations in the genes of these cells are copied to every cell of the offspring and expressed in its phenotype.¹ Mutations in the somatic cells (such as liver cells or skin cells) of such organisms do not have evolutionary consequences; they are extinguished with the organism’s death (1998: 267).

Figure 5. Mutation causing sickle-cell anemia. (Snustad & Simmons 2003: 20)

Futuyma explains that the rate of mutation is affected by environmental factors (e.g. chemicals, radiation). The phenotypic effects of mutations range all the way from undetectable to very great, and from destructive to beneficial. Mutations that make a difference in survival or reproduction pass through the filter of natural selection; those whose effects are undetectable by natural selection drift randomly (1998: 26–7).
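The range of effects can be illustrated with single-base changes to one codon. This is a minimal sketch assuming mRNA codons written as strings and a three-entry excerpt of the standard genetic code; the function names are hypothetical. The Glu-to-Val change shown is the substitution known to underlie sickle-cell anemia.

```python
# Excerpt from the standard genetic code; only the codons used here.
CODONS = {"GAG": "Glu", "GAA": "Glu", "GUG": "Val"}

def point_mutation(codon, position, new_base):
    """Replace the base at a zero-based position within a codon."""
    return codon[:position] + new_base + codon[position + 1:]

def classify(original, mutant):
    """'silent' if the amino acid is unchanged, otherwise 'missense'."""
    if CODONS[original] == CODONS[mutant]:
        return "silent"
    return "missense"

third_base = point_mutation("GAG", 2, "A")   # GAG -> GAA
second_base = point_mutation("GAG", 1, "U")  # GAG -> GUG
print(third_base, classify("GAG", third_base))    # GAA silent
print(second_base, classify("GAG", second_base))  # GUG missense
```

Both mutants differ from the original by a single base, yet one leaves the protein untouched while the other alters it, so the size of a change in the DNA says little about the size of its phenotypic effect.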

Mutation is not systematically biased in the direction of adaptive improvement, and no mechanism is known (to put the point mildly) that could guide mutation in directions that are non-random (…). Mutation is random with respect to adaptive advantage, although it is non-random in all sorts of other respects. It is selection, and only selection, that directs evolution in directions that are non-random with respect to advantage. (Dawkins 1986: 312)

For instance, a mutation in a tiger sperm may cause the offspring to have less sharp teeth. This new mutant gene will not last long in the successive generations as the mutant tigers will prey less efficiently and have fewer offspring. In other words, the gene will be eliminated by natural selection along with the tigers who carry it. Another mutation may result in sharper teeth, which will make the tiger kill prey more efficiently than others and hence have more offspring which will carry the new mutant gene. This mutant genotype will replace the old one altogether within the population as generations pass by. (Dawkins 1986: 122)
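The fate of the two mutant genes can be traced with a standard textbook recursion for the frequency of a variant under selection. The sketch is deliberately crude and its assumptions are not Dawkins's: reproduction is treated as asexual (tigers are in fact diploid and sexual), the population is large enough to ignore chance, and the mutant leaves 1 + s offspring for every one left by the old type. The selection coefficient s and the starting frequencies are hypothetical numbers.

```python
def mutant_frequency(p0, s, generations):
    """Frequency of a mutant gene with relative fitness 1 + s, per generation."""
    p = p0
    history = [p]
    for _ in range(generations):
        mean_fitness = p * (1 + s) + (1 - p)  # average offspring number
        p = p * (1 + s) / mean_fitness        # mutant share of the next generation
        history.append(p)
    return history

sharper = mutant_frequency(p0=0.01, s=0.10, generations=200)
blunter = mutant_frequency(p0=0.50, s=-0.10, generations=200)
print(sharper[-1] > 0.99)  # True
print(blunter[-1] < 0.01)  # True
```

With a ten percent advantage, a gene that starts in one percent of the population is carried by nearly all of it after 200 generations, while a gene with a ten percent disadvantage all but disappears even when it starts in half of the population.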

Maybe the most counterintuitive aspect of evolution is that variation is randomly generated by mistakes in replication. We humans tend to see randomness as something erroneous and unwanted within our technological paradigm, but in evolution, randomness is a way of exploring new avenues (Calvin 1987). It is true that random variation on its own cannot be responsible for all the design work in nature; it is when random variation is selected and accumulated over millennia that evolution happens. As Dawkins recapitulates, “mutation is random; natural selection is the very opposite of random” (1986: 41).

—